Facebook case demonstrates gaps in data ownership laws

By Rep. Jim Langevin

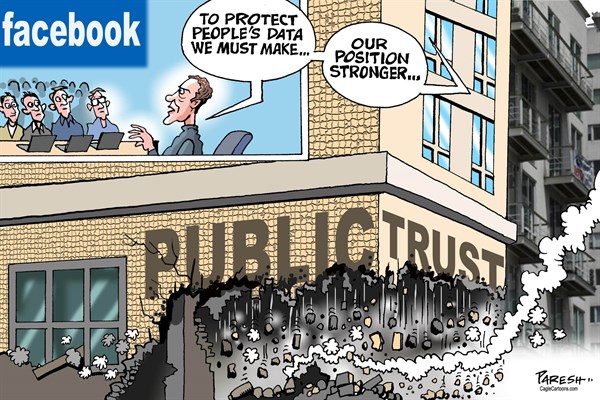

A recent survey indicated that users have little trust in Facebook to follow privacy laws. Trust is the operative word.

Privacy policies, account settings, and terms of use play a larger role than any federal law in limiting the use of personal data beyond health or financial information.

We extend a great deal of trust to a company when we give them our personal information – trust that they will take care of our data and abide by the contracts that govern our relationship.

But after three decades of explosive growth in data harvesting, recent events make it clear that trust may be misplaced.

Facebook’s conduct with the underhanded campaign consultancy firm, Cambridge Analytica, has laid bare the limits of data protection law.

Facebook users are the victims in this case – yet the company may only be liable under federal law if it also violated one of its written contracts with users.

The innovations of the Information Age have outstripped the U.S. legal system’s protections for individual control over how our personal information is shared and used. It is time for that to change.

As the complexity of data sharing increases, so does the possibility that our information will be used in ways we never intended or authorized.

Take the Facebook case. I challenge anyone to find a single one of the millions of affected users who provided information to Facebook with the expectation that Cambridge Analytica would use it to develop “psychographic” voting profiles for targeted political ads.

More transparency is essential for policymakers to fully grasp the implications of this incident, and Facebook owes its users and shareholders – both of which I am – a full accounting of its actions.

However, the available reporting is enough to provide a framework to explore policy options for strengthening controls on data usage.

Facebook reportedly learned that Cambridge Analytica had acquired millions of users’ profiles two years ago.

At the time, Facebook sent letters to Cambridge Analytica and an associated researcher insisting that they delete the information.

However, two important things did not happen:

Facebook did not positively verify disposal of the data through an audit, and no individuals were notified that their private information had been used in a way they had not authorized.

There were no federal requirements that either happen, just trust in the parties involved. Having seen that trust doubly betrayed, we may need new law to impose rigorous notification and disposal requirements when users’ data is shared improperly.

Facebook has stated that it was a violation of its agreements with Aleksandr Kogan, the Cambridge University researcher who initially collected the data, for him to sell or license it to Cambridge Analytica.

This defense misses the point that granting unfettered access to raw data makes it technically and legally difficult to enforce limitations on data usage and sharing.

Facebook extended trust to the researcher, on behalf of its users and without their knowledge, that the data would be used and protected in accordance with its terms.

Those terms also allowed apps like the one the researcher used to collect data not only about users who explicitly authorized the app to do so, but also about their friends. While Facebook revoked that policy in 2014, there remains no legal requirement that users directly consent to sharing.

Finally, central to this case is the data that the affected users gave to Facebook in the first place.

As a condition of joining the social network, users were required to agree to a privacy policy – whether or not they read and understood it – and could only modify the privacy settings Facebook chose to make available.

As remarkable as it may sound, this is standard practice. The companies we do business with decide what they can do with our data and what control over those uses they offer to us; we don’t get to choose.

Our only alternative is not to use a service at all, and that is less and less of an option in our Internet-enabled economy. Congress could change the law to require that companies give users granular control of their data and codify the right to know how, when and with whom that data is shared.

As long as data sharing adheres to published terms of use, the law does not prohibit most companies from selling or licensing access to your data, for virtually any purpose or duration, without notice to you.

They have no obligation to verify that recipients of your data are not abusing it. Without laws to the contrary, we are left to trust service providers that our data will not be misused, misplaced, or misappropriated.

Facebook violated that trust, and Congress must take action to update the law to put control of digital identities in more trusted hands – our own.

Rep. Langevin represents Rhode Island's 2nd District. He is co-chair of the Congressional Cybersecurity Caucus.