DARPA and the Navy funded this project

By ADAM ZEWE, MASSACHUSETTS INSTITUTE OF TECHNOLOGY

A new technique could enable a robot to manipulate squishy objects like pizza dough or soft materials like clothing.

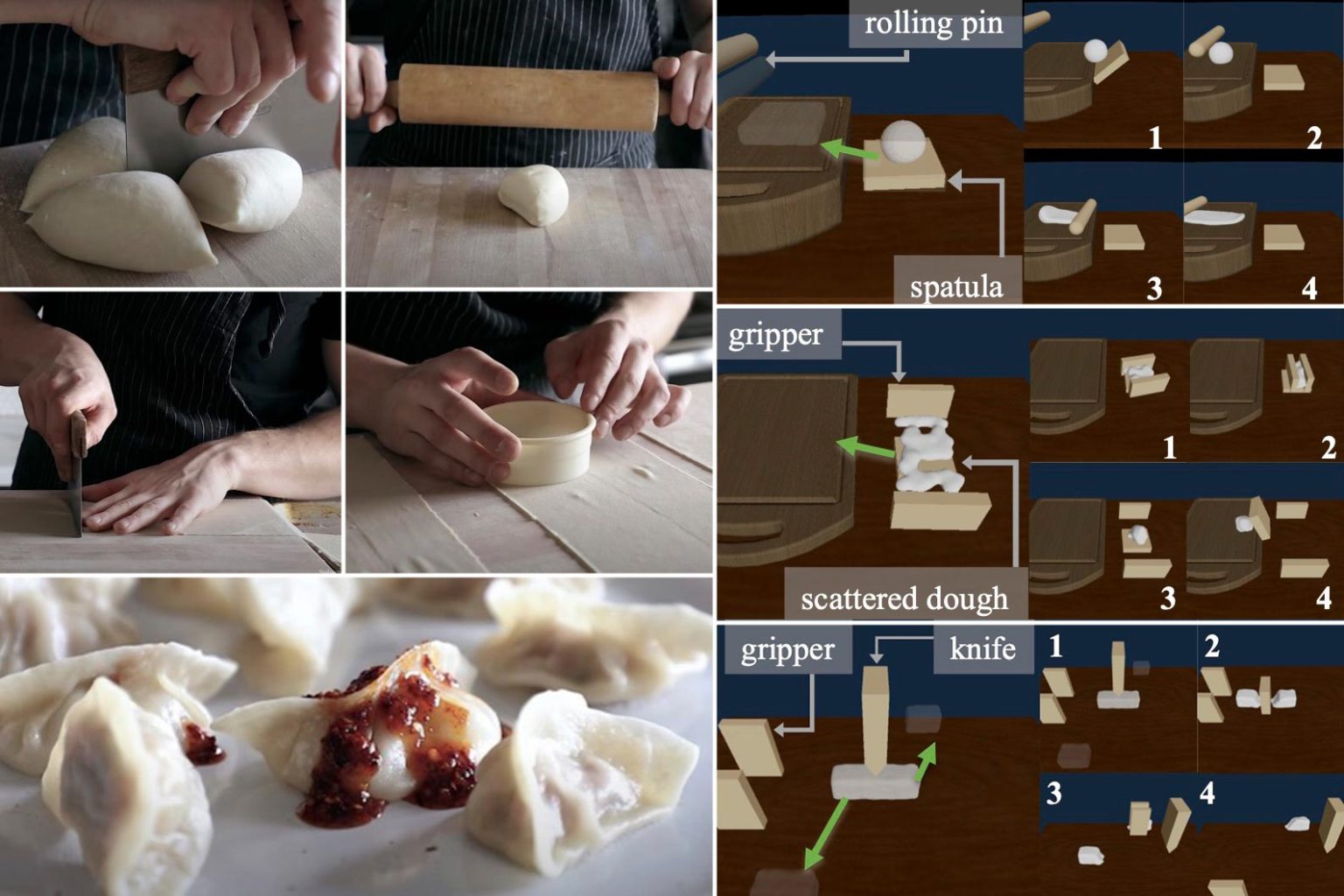

Imagine a pizza maker working with a ball of dough. She might use a spatula to lift the dough onto a cutting board, then use a rolling pin to flatten it into a circle.

Easy, right? Not if this pizza maker is a robot.

For a robot, working with a deformable object like dough is tricky because the shape of dough can change in many ways, which are difficult to represent with an equation.

Plus, creating a new shape out of that dough requires multiple steps and the use of different tools.

It is especially difficult for a robot to learn a manipulation task with a long sequence of steps, where there are many possible choices, since learning often occurs through trial and error.

Researchers at MIT, Carnegie Mellon University, and the University of California at San Diego have come up with a better way. They created a framework for a robotic manipulation system that uses a two-stage learning process, which could enable a robot to perform complex dough-manipulation tasks over a long timeframe.

A “teacher” algorithm solves each step the robot must take to complete the task. Then, it trains a “student” machine-learning model that learns abstract ideas about when and how to execute each skill it needs during the task, like using a rolling pin. With this knowledge, the system reasons about how to execute the skills to complete the entire task.

The researchers show that this method, which they call DiffSkill, can perform complex manipulation tasks in simulations, like cutting and spreading dough, or gathering pieces of dough from around a cutting board, while outperforming other machine-learning methods.

Beyond pizza-making, this method could be applied in other settings where a robot needs to manipulate deformable objects, such as a caregiving robot that feeds, bathes, or dresses someone elderly or with motor impairments.

“This method is closer to how we as humans plan our actions. When a human does a long-horizon task, we are not writing down all the details. We have a higher-level planner that roughly tells us what the stages are and some of the intermediate goals we need to achieve along the way, and then we execute them,” says Yunzhu Li, a graduate student in the Computer Science and Artificial Intelligence Laboratory (CSAIL), and an author of a paper presenting DiffSkill.

Student and teacher

The “teacher” in the DiffSkill framework is a trajectory optimization algorithm that can solve short-horizon tasks, where an object’s initial state and target location are close together. The trajectory optimizer works in a simulator that models the physics of the real world (known as a differentiable physics simulator, which puts the “Diff” in “DiffSkill”). The “teacher” algorithm uses the information in the simulator to learn how the dough must move at each stage, one at a time, and then outputs those trajectories.
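To make the teacher’s role concrete, here is a minimal sketch under heavy simplifying assumptions: the “dough” is a single point moving along one axis, the simulator is a few lines of hand-written Python, and its gradient is derived by hand rather than supplied by a real differentiable physics engine. The function names (`simulate`, `loss`, `loss_grad`, `optimize_trajectory`) and every parameter value are hypothetical; the point is only that when a simulator is differentiable, gradient descent can directly tune a short sequence of actions toward a target.

```python
# Toy "teacher": gradient-based trajectory optimization through a
# hand-written, differentiable 1D simulator. Illustrative only; a real
# differentiable physics simulator models deformable materials.


def simulate(x0, actions, dt=0.1):
    """Roll out a 1D point whose velocity is set by each action.

    Returns every visited position, starting with x0.
    """
    xs = [x0]
    for a in actions:
        xs.append(xs[-1] + a * dt)
    return xs


def loss(x0, actions, goal, dt=0.1, reg=1e-3):
    """Squared distance from the final position to the goal,
    plus a small penalty on action size."""
    x_final = simulate(x0, actions, dt)[-1]
    return (x_final - goal) ** 2 + reg * sum(a * a for a in actions)


def loss_grad(x0, actions, goal, dt=0.1, reg=1e-3):
    """Exact gradient of `loss` with respect to each action.

    Since x_T = x0 + dt * sum(a_t), dx_T/da_t = dt for every t, so
    dL/da_t = 2 * (x_T - goal) * dt + 2 * reg * a_t.
    """
    x_final = simulate(x0, actions, dt)[-1]
    return [2 * (x_final - goal) * dt + 2 * reg * a for a in actions]


def optimize_trajectory(x0, goal, horizon=10, steps=500, lr=0.5, dt=0.1):
    """Plain gradient descent on the whole action sequence."""
    actions = [0.0] * horizon
    for _ in range(steps):
        grads = loss_grad(x0, actions, goal, dt)
        actions = [a - lr * g for a, g in zip(actions, grads)]
    return actions


if __name__ == "__main__":
    plan = optimize_trajectory(x0=0.0, goal=1.0)
    print("final position:", simulate(0.0, plan)[-1])
```

With these settings the final position lands close to, but slightly short of, the goal of 1.0, because the small action penalty trades a little accuracy for gentler motions.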

Then the “student” neural network learns to imitate the actions of the teacher. As inputs, it uses two camera images, one showing the dough in its current state and another showing the dough at the end of the task. The neural network generates a high-level plan to determine how to link different skills to reach the goal. It then generates specific, short-horizon trajectories for each skill and sends commands directly to the tools.
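Imitating a teacher is, in its simplest form, behavior cloning: fitting a policy to the teacher’s recorded observations and actions. The sketch below illustrates only that idea, under strong assumptions: the observation is a scalar current position and a scalar goal position instead of two camera images, the student is a linear model rather than a neural network, and `teacher_action` is a hypothetical stand-in for the trajectories a teacher would output.

```python
# Toy "student": behavior cloning from teacher demonstrations.
# Assumption: observations are (current position, goal position), not images.
import random


def teacher_action(x, goal):
    """Hypothetical stand-in for the teacher's optimized action."""
    return 2.0 * (goal - x)


def collect_demos(n=200, seed=0):
    """Sample (current, goal, action) triples from the teacher."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        x, goal = rng.uniform(-1, 1), rng.uniform(-1, 1)
        demos.append((x, goal, teacher_action(x, goal)))
    return demos


def fit_student(demos):
    """Least-squares fit of a linear policy a = w * (goal - x) + b."""
    feats = [goal - x for x, goal, _ in demos]
    acts = [a for _, _, a in demos]
    n = len(demos)
    mean_f = sum(feats) / n
    mean_a = sum(acts) / n
    cov = sum((f - mean_f) * (a - mean_a) for f, a in zip(feats, acts))
    var = sum((f - mean_f) ** 2 for f in feats)
    w = cov / var
    b = mean_a - w * mean_f
    return lambda x, goal: w * (goal - x) + b


if __name__ == "__main__":
    student = fit_student(collect_demos())
    print(student(0.0, 0.5), teacher_action(0.0, 0.5))
```

Because this toy teacher is itself linear and noise-free, the fitted student reproduces it exactly; a real student network instead has to generalize from many optimized trajectories to images it has not seen.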

The researchers used this technique to experiment with three different simulated dough-manipulation tasks. In one task, the robot uses a spatula to lift dough onto a cutting board, then uses a rolling pin to flatten it. In another, the robot uses a gripper to gather dough from all over the counter, places it on a spatula, and transfers it to a cutting board. In the third task, the robot cuts a pile of dough in half using a knife and then uses a gripper to transport each piece to different locations.
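The high-level plan can be pictured as a search over which skills to chain, and in what order. The toy planner below is an illustration under explicit assumptions, not DiffSkill’s planner: it uses a hand-written symbolic dough state of (location, shape), hand-coded preconditions and effects for three hypothetical skills, and it enumerates skill sequences in order of length until one reaches the goal. Applied to the first task above (lift, then roll), it recovers that two-step chain.

```python
# Toy high-level planner: find the shortest chain of skills that turns
# the current dough state into the goal state.
# Assumption: a hand-written symbolic state (location, shape); the real
# system works from camera images rather than rules like these.
from itertools import product

# Each hypothetical skill maps to (precondition, effect).
SKILLS = {
    "spatula_lift": (
        lambda s: s[0] == "counter",
        lambda s: ("board", s[1]),
    ),
    "roll_flat": (
        lambda s: s[0] == "board" and s[1] == "ball",
        lambda s: (s[0], "flat"),
    ),
    "gripper_gather": (
        lambda s: s[1] == "scattered",
        lambda s: (s[0], "ball"),
    ),
}


def execute(state, plan):
    """Apply skills in order; return None if any precondition fails."""
    for name in plan:
        feasible, effect = SKILLS[name]
        if not feasible(state):
            return None
        state = effect(state)
    return state


def plan_skills(start, goal, max_len=3):
    """Try all sequences of length 0, 1, ..., max_len; return the first
    (hence shortest) one that reaches the goal, or None."""
    for length in range(max_len + 1):
        for plan in product(SKILLS, repeat=length):
            if execute(start, plan) == goal:
                return list(plan)
    return None
```

For example, `plan_skills(("counter", "ball"), ("board", "flat"))` returns `["spatula_lift", "roll_flat"]`. Brute-force enumeration is only practical for a handful of skills and short horizons, which is one reason a learned planner is attractive.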

DiffSkill outperformed popular techniques that rely on reinforcement learning, where a robot learns a task through trial and error. In fact, DiffSkill was the only method that successfully completed all three dough-manipulation tasks. Interestingly, the researchers found that the “student” neural network was even able to outperform the “teacher” algorithm, says lead author Xingyu Lin.

“Our framework provides a novel way for robots to acquire new skills. These skills can then be chained to solve more complex tasks which are beyond the capability of previous robot systems,” says Lin.

Because their method focuses on controlling the tools (spatula, knife, rolling pin, etc.), it could be applied to different robots, but only if they use the specific tools the researchers defined. In the future, they plan to integrate the shape of a tool into the reasoning of the “student” network so it could be applied to other equipment.

The researchers intend to improve the performance of DiffSkill by using 3D data as inputs, instead of images that can be difficult to transfer from simulation to the real world. They also want to make the neural network planning process more efficient and collect more diverse training data to enhance DiffSkill’s ability to generalize to new situations. In the long run, they hope to apply DiffSkill to more diverse tasks, including cloth manipulation.

Reference: “DiffSkill: Skill Abstraction From Differentiable Physics for Deformable Object Manipulations With Tools” by Xingyu Lin, Zhiao Huang, Yunzhu Li, Joshua B. Tenenbaum, David Held and Chuang Gan, OpenReview.

This work is supported, in part, by the National Science Foundation, LG Electronics, the MIT-IBM Watson AI Lab, the Office of Naval Research, and the Defense Advanced Research Projects Agency Machine Common Sense program.